3. Getting started writing CHERIoT software

Now that you understand the abstract ideas in CHERIoT, you almost certainly want to start writing some real code. CHERIoT is a complete hardware-software platform, which means that you will need at least the following:

- A device or simulator that implements the ISA.

- A copy of the CHERIoT RTOS software to run on the device.

- A CHERIoT toolchain to compile and link the software.

The whole software stack, the ISA specification, and a reference implementation of a CHERIoT core are all open source and so these should be easy to acquire.

This is not the complete list of software in the CHERIoT ecosystem. For example, the auditing tool (see Chapter 10. Auditing firmware images) is separate and there are other open-source components maintained as part of the project.

3.1. Getting the RTOS source code

CHERIoT RTOS is developed on GitHub. This is also the home for the project's issue tracker, so please report any bugs that you find there! You will need to clone this repository to get the latest version.

The RTOS has not quite reached 1.0 at the time of writing. After the 1.0 release, you should also be able to download the RTOS source as a release. Please see the README.md file in the repository for the latest instructions.

The RTOS repository uses git submodules for some third-party components. This means that you must do a recursive clone:

    $ git clone --recursive https://github.com/CHERIoT-Platform/cheriot-rtos
    Cloning into 'cheriot-rtos'...

This will create a directory called cheriot-rtos. Inside this you will find a directory called sdk, which contains the SDK. There are also some examples and exercises to help you get started.

If you forgot to do a recursive clone, you can run the following command from the cheriot-rtos directory to initialise the submodules:

$ git submodule update --init --recursive
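If you are not sure whether the submodules were fetched, `git submodule status` marks each uninitialised submodule with a leading `-` before the commit hash. The following is a minimal sketch of a check built on that output. The function name is hypothetical and is not part of the RTOS repository.

```shell
# List submodules that have not been initialised.
# Reads the output of `git submodule status --recursive` on stdin;
# git prefixes the commit hash with '-' for uninitialised submodules.
uninitialised_submodules() {
    awk '/^-/ { print $2 }'
}

# Usage, from the cheriot-rtos directory:
#   git submodule status --recursive | uninitialised_submodules
```

If this prints nothing, every submodule has been initialised and the recursive update above is not needed.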

3.2. Using the CHERIoT development container

The CHERIoT project provides a development container, usually referred to as a dev container. This is an Open Container Initiative (OCI) container image that has all of the tools required for building CHERIoT software preinstalled. This includes the toolchain, auditing tools (see Chapter 10. Auditing firmware images) and some simulators (see Section 3.4. Choosing an implementation).

OCI containers are sometimes referred to as Docker Containers, because the standards evolved from the model supported by the Docker tools, but they are now supported by a range of software including containerd, podman, and so on. A container instance (often referred to simply as a container) is an isolated environment that is instantiated from a container image. The image is a filesystem built from a set of layers, which allows different containers to share on-disk (and in-cache) space for different images that share a common base.

Dev containers are intended to be used with an editor that supports them but can also be used directly as if they were any other kind of container. Most of what makes a container image a dev container is not part of the container image. The RTOS repository contains a .devcontainer/devcontainer.json file that describes how to find and use the image. This includes scripts to run when the container is created, editor plugins to install, and so on.

This means that, if you use an editor that supports dev containers directly, the experience is largely seamless. If you open the RTOS repository in Visual Studio Code and have the Dev Containers plugin installed, it will prompt you to reopen the repository in a container. This will then fetch the container for you, configure plugins for syntax highlighting, autocomplete, and so on.

If you're using Windows, you may find that git has mangled line endings or failed to create symbolic links (which require Developer Mode to be enabled). Visual Studio Code will offer an option to clone the repository in a new volume. Docker and Podman on Windows run containers in a Linux virtual machine and volumes are implemented as folders in the VM's filesystem, rather than as Windows folders mounted into the VM. You will probably find that this works better.

If, like me, your preferred development environment is a lightly modernised version of a 1970s minicomputer, you can still use the dev container. The container image is ghcr.io/cheriot-platform/devcontainer:latest. You can run this directly from the directory where you checked out the RTOS repository:

    $ docker run --rm -it \
        --mount \
        source=$(pwd),target=/home/cheriot/cheriot-rtos,type=bind \
        ghcr.io/cheriot-platform/devcontainer:latest

This command will create a single instance of the container with the current directory mounted as /home/cheriot/cheriot-rtos. This creates an ephemeral instance. You can create persistent instances with docker create.
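As a rough sketch of the persistent alternative, `docker create` builds a named container that `docker start` can re-enter later, so changes made inside the container survive between sessions. The container name `cheriot-dev` and both function names are assumptions chosen for illustration.

```shell
# Create a named, persistent container with the current directory mounted.
# The name 'cheriot-dev' is a placeholder, not something the project defines.
cheriot_container_create() {
    docker create -it --name cheriot-dev \
        --mount "source=$(pwd),target=/home/cheriot/cheriot-rtos,type=bind" \
        ghcr.io/cheriot-platform/devcontainer:latest
}

# Start the existing container and attach an interactive session to it.
cheriot_container_enter() {
    docker start -ai cheriot-dev
}
```

Run `cheriot_container_create` once from the directory where you checked out the RTOS repository, then `cheriot_container_enter` each time you want to resume work.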

In the dev container, all of the CHERIoT tools are installed in /cheriot-tools/bin/.

The dev container also exists to support GitHub Codespaces. These run a Visual Studio Code instance in a browser, attached to a dev container deployed in an Azure VM. If you create a Codespace from the CHERIoT RTOS repository, it will be set up with everything that you need to develop for CHERIoT in the browser. GitHub Codespaces are a good way to start playing with CHERIoT, but the free tier is limited to 120 CPU hours (60 hours on the smallest VM tier) so you will probably want to install the toolchain locally for serious development.

3.3. Setting up a development environment

Having a copy of the RTOS software does not enable you to build it. You will also need a toolchain: a compiler, linker, and other associated tools that can take source code and turn it into a firmware image that can run on a device. If you are using the dev container, these tools are all installed for you.

The CHERIoT toolchain is based on LLVM, which used to stand for 'Low Level Virtual Machine' until it became clear that none of those words actually applied to the project and it is now just a name. LLVM is a generic set of building blocks for writing compilers, structured around the LLVM Intermediate Representation (LLVM IR). It includes a mature C/C++ front end, a component that transforms C and C++ (and Objective-C) into LLVM IR. This front end, clang, is the default compiler on Apple platforms, Android, and FreeBSD. LLVM also includes a linker, lld. These two are sufficient to turn source code into something that can run on a system. LLVM also provides some other components that are useful for development. For example, llvm-objdump is used to disassemble a binary, which is useful when you have some telemetry that tells you that you've taken a CHERI bounds exception at address 0xbaadc0de but you would quite like to know what that corresponds to in the source code where you might be able to fix the issue. It also includes llvm-objcopy, which is used on some targets to turn an Executable and Linkable Format (ELF) file into a raw stream of bytes to be loaded into memory.

The CHERIoT LLVM toolchain aims to upstream all of the CHERIoT support in mainline LLVM. We hope to have the majority of this work done in 2025, so by the time that you read this it's possible that a generic LLVM install will be sufficient. We expect to do new-feature development in the CHERIoT LLVM repository so you may prefer to use it even if upstream works.

You can build LLVM yourself, though it takes quite a lot of CPU time and memory. Make sure you have at least 10-20 GiB of disk space available if you want to do this. You will find instructions in the CHERIoT RTOS Getting Started guide. Generally, building the toolchain yourself is recommended only if you have software supply-chain concerns or if you are working on the toolchain. For everyone else, it's better to use a pre-built version from the dev container.

These days, a compiler is expected to do more than simply compile code. It is also expected to talk the language server protocol (LSP) and provide syntax highlighting, autocompletion, cross-referencing, and so on.

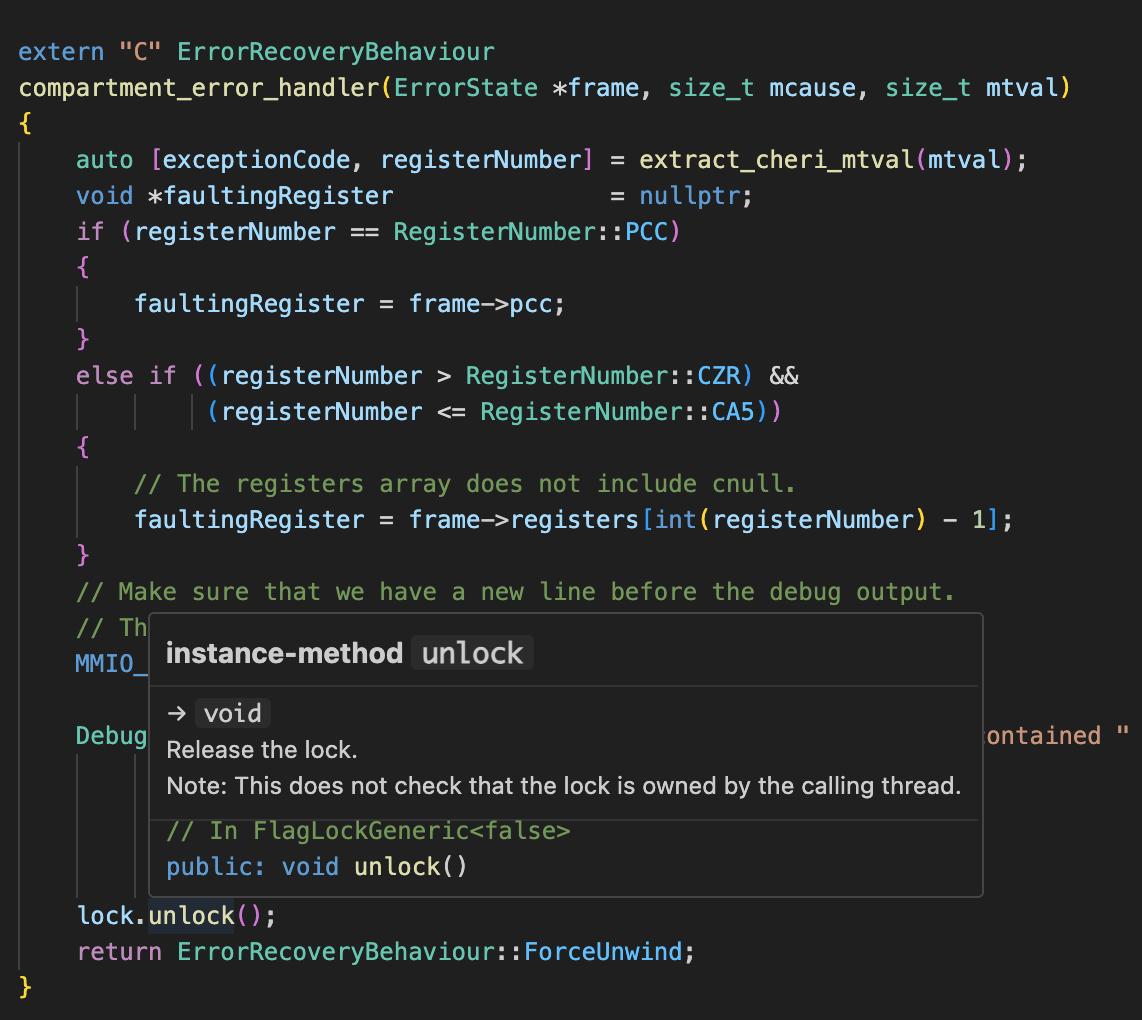

Figure 2. Visual Studio Code with clangd integration for syntax highlighting and cross-referencing.

The build system used by CHERIoT RTOS is intended to make this easy to support. Figure 2 shows the result in Visual Studio Code. All of the CHERIoT-specific extensions (see Chapter 4. C/C++ extensions for CHERIoT) are correctly highlighted.

This support is not limited to Visual Studio Code. It can work with any editor that supports the language-server protocol. The parsing code from the clang front end is also part of the clangd dæmon, which implements the server part of this protocol.

The dev container includes a .vimrc that (if you install Vim) uses the Asynchronous Lint Engine plugin to connect to our clangd build. Simply run :PlugInstall in Vim to install it.

C and C++ are more complex than many languages for syntax highlighting and cross references. Consider even a simple hello-world C program, which starts with #include <stdio.h>. Where does stdio.h come from? That typically depends on command-line arguments passed to the compiler. For native compilation, there may be some good places to guess (such as /usr/include on *NIX platforms) but for cross compilation, this is harder. This gets more complex with code that references macros passed on the command line. Without knowing the command line for the compiler, the syntax highlighter can't even tell the type of these identifiers.

Clang works around this with a JSON compilation database. This is a JSON file that provides the command line used to compile each file. When clangd is asked to open a file, it searches up the directory tree until it finds a compile_commands.json file and uses it to determine how to open the file.
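To make the lookup concrete, here is a small sketch of the upward search in shell. It mirrors the behaviour as described above and is not clangd's actual implementation. The function name is hypothetical.

```shell
# Walk up from the directory containing a source file until a
# compile_commands.json is found; print its path, or fail if none exists.
find_compile_commands() {
    dir=$(cd "$(dirname "$1")" && pwd) || return 1
    while :; do
        if [ -f "$dir/compile_commands.json" ]; then
            printf '%s\n' "$dir/compile_commands.json"
            return 0
        fi
        # Stop once the filesystem root has been checked.
        [ "$dir" = "/" ] && return 1
        dir=$(dirname "$dir")
    done
}

# Usage: find_compile_commands path/to/source.cc
```

This can be handy when an editor is not picking up the right flags: if the function prints nothing for a file, no compilation database is visible from that file's location.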

For CHERIoT, this will be different for each project. When you create the dev container for the first time from an editor that supports dev containers, it will run a script that generates these for the core RTOS and for the examples and exercises. If you launched the dev container yourself, you can run this script yourself. You will find it in scripts/generate_compile_commands.sh in the RTOS repository.

This script simply invokes xmake, which we'll see in more detail in Section 3.5. Building firmware images. For your own projects, run the following command:

$ xmake project -k compile_commands

This must be run after the xmake configure step, so that the build system knows how to build each file and is then able to communicate this to clangd.
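The ordering can be captured in a small helper. This is a sketch that assumes your xmake.lua exposes the --board option described in Section 3.5 and that no other configure options are needed. The function name is hypothetical.

```shell
# Configure first, then export the compilation database for clangd.
# The second step runs only if configuration succeeded.
# Assumes the project declares a "board" option; other configure
# options that your setup may need are omitted here.
generate_compile_commands() {
    xmake config --board="$1" && xmake project -k compile_commands
}

# Usage, from the directory containing xmake.lua:
#   generate_compile_commands sail
```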

3.4. Choosing an implementation

You should now have everything installed to be able to build a firmware image. The next step is to be able to run one. This means some implementation of the CHERIoT Instruction Set Architecture (ISA).

The CHERIoT ISA is another open-source project maintained as part of the CHERIoT Platform organisation. As with other modern instruction sets, this is formally specified in an ISA modelling language, in our case Sail. Sail (a name, not an acronym) can export to various theorem provers and has some built-in support for running SMT queries, which we use to check some properties about the ISA automatically. It can also generate a simulator. We use this simulator as our gold model: it is a reference implementation of how the architecture should behave and so can be used to compare behaviour across implementations.

Sail is one of several emulators or simulators included in the dev container. The CHERIoT-SAFE (Small And Fast FPGA Emulator) project is the testbed for the CHERIoT Ibex, the reference implementation of the CHERIoT ISA. Both of these projects are maintained by Microsoft. CHERIoT-SAFE can target the Arty A7 low-cost FPGA development board and also build a software simulation using verilator.

The CHERIoT Ibex is, at the time of writing, the only available core that supports the CHERIoT ISA, though we expect more to appear in the next few years. The Ibex is a three-stage in-order core, which is optimised for area, rather than performance. As part of the original CHERIoT research project, we also added the CHERIoT extensions for Flute, a RISC-V core implemented in BlueSpec. Flute was not production quality, but did demonstrate that a five-stage core that was (slightly) more optimised for performance could eliminate most of the CHERIoT-specific overhead. Ibex is expected to be slower than a similar-complexity non-CHERI microcontroller, but is only very slightly larger.

Google has also contributed an emulator based on their MPACT simulation environment. MPACT is intended for integration with Renode for simulating complex SoCs. Google has created a clean-slate implementation of the CHERIoT ISA in this. This is currently, by quite a large margin, the fastest of the available simulators or emulators. The Sail model is directly translated from the formal model and typically manages 200–400 KIPS (thousand instructions per second) on a fast machine. The SAFE simulator is a cycle-accurate simulation of a chip and is typically a bit over 50% of the performance of Sail. The MPACT simulator can usually manage over 5 MIPS, at least an order of magnitude faster than Sail.
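To put these rates in perspective, the arithmetic below estimates how long each simulator would take on the same workload. The workload size of 100 million instructions is an arbitrary assumption for illustration, and the rates are the round figures quoted above rather than measurements.

```shell
# Rough wall-clock seconds needed to execute a number of instructions
# at a given simulation rate (instructions per second). Integer division.
sim_seconds() {
    echo $(( $1 / $2 ))
}

# A workload of 100 million instructions (an arbitrary example size):
sim_seconds 100000000 200000     # Sail at 200 KIPS: 500
sim_seconds 100000000 400000     # Sail at 400 KIPS: 250
sim_seconds 100000000 5000000    # MPACT at 5 MIPS:  20
```

On these figures, a run that takes roughly four to eight minutes in Sail finishes in about twenty seconds in MPACT.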

Beyond software simulators there are currently two mature options for FPGA simulation. The SAFE project, as previously mentioned, can be run on the Arty A7. Unfortunately, Microsoft does not provide FPGA bitfiles so you must build this yourself.

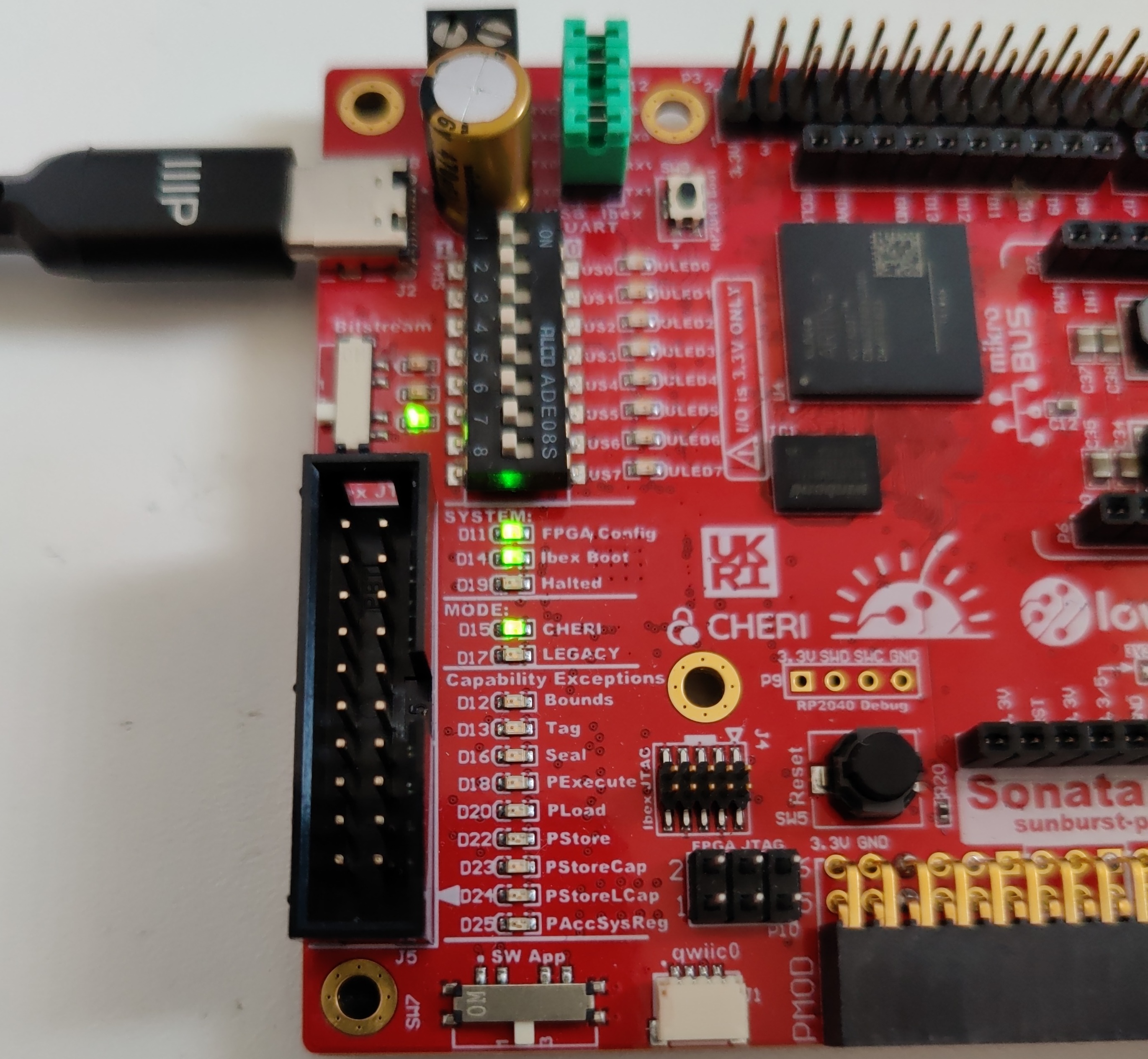

lowRISC has produced Sonata, an FPGA development board designed specifically for CHERIoT, using a slightly smaller version of the same FPGA as the Arty A7. This has a rich set of peripherals, including an LCD display. It also has a set of LEDs that can display CHERI exceptions directly for the CHERIoT Ibex core, as shown in Figure 3. These will glow red and gradually fade when a CHERIoT-specific exception is triggered in software.

Figure 3. CHERIoT exception LEDs on the Sonata FPGA development board.

At the time of writing, there are not yet any CHERIoT chips commercially available. SCI Semiconductor has announced their ICENI line of CHERIoT microcontrollers, the first of which should be available in 2025.

3.5. Building firmware images

CHERIoT RTOS uses the xmake build system. Xmake is a build system implemented in Lua. It was chosen because it is easy to add new kinds of build targets.

In a typical system that uses the compile-link process invented by Mary Allen Wilkes in the 1960s, you compile source files to object code and then link object code to produce executables. You may have an intermediate step that produces libraries.

The CHERIoT build process was designed to enable separate compilation and binary distribution of components. Each source file is compiled either for use in a shared library or for use in a specific compartment. This means that, when building compartments, the compiler invocation must know the compartment in which the object file will be used.

Next, compartments and libraries are linked. This requires a special invocation of the linker that produces a relocatable object file with the correct structure. At this point, the only exported symbols are those for exported functions and the only undefined symbols should be those for MMIO regions or exports from other compartments (see Chapter 5. Compartments and libraries for more information).

The build system produces a .library or .compartment file for each shared library and each compartment.

In theory, these can be distributed as binaries and linked into a firmware image but this is not yet handled automatically by the build system.

The final link step produces a firmware image. It also produces the JSON report that describes all cross-compartment interactions and is used for auditing.

Using the RTOS build system involves writing an xmake.lua file that describes the build. This starts with some boilerplate:

    set_project("CHERIoT example")
    sdkdir = os.getenv("CHERIOT_SDK") or "../../cheriot-rtos/sdk/"
    includes(sdkdir)
    set_toolchains("cheriot-clang")

Listing 1. Build system code for importing the CHERIoT RTOS SDK (examples/hello_world/xmake.lua)

The set_project call gives a name to the project.

The sdkdir assignment and the includes call import the RTOS SDK. This first tries to use the CHERIOT_SDK environment variable and, if that is not set, falls back to a relative path. The sdkdir variable should point to the location of the sdk directory from the RTOS repository. Finally, the set_toolchains call selects the CHERIoT toolchain. Ideally this line would not be needed, but xmake's scoping rules require it to be provided here.

This boilerplate snippet will exist at the top of most xmake.lua files for CHERIoT. Only the name of the project (and possibly the path to the SDK) will be different.
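The same fallback can be expressed in shell, which may help when scripting builds around the boilerplate. This is an analogue of the logic for illustration, not code from the SDK, and the function name is hypothetical.

```shell
# Resolve the SDK path in the same spirit as the boilerplate: use
# CHERIOT_SDK if it is set, otherwise fall back to the relative path.
# ${VAR-default} (no colon) falls back only when the variable is unset.
resolve_sdkdir() {
    printf '%s\n' "${CHERIOT_SDK-../../cheriot-rtos/sdk/}"
}

# Usage: point a build at a checkout elsewhere (the path is a placeholder):
#   CHERIOT_SDK=/path/to/cheriot-rtos/sdk xmake config
```

Setting the environment variable lets you keep your project anywhere on disk without editing the relative path in xmake.lua.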

The SDK file provides rules for building the various kinds of CHERIoT components (compartments, libraries, and firmware) and also includes all of the libraries that are part of the RTOS. These libraries include the core definitions for a freestanding C implementation (memcpy and friends), the atomic helpers for cores without atomic instructions, and the C runtime things that are called from compiler builtins. See the lib directory in the SDK for a full list.

If you want your firmware built to support running on more than one CHERIoT implementation then you will typically want to expose a build-configuration option that selects the target board, as shown in Listing 2. This exposes a --board option at the configure stage.

    option("board")
        set_default("sail")

Listing 2. Build system code for allowing the board to be selected at configure time (examples/hello_world/xmake.lua)

You can set a default and we use "sail" here for the simulator build from our Sail formal model of the ISA. This refers to a board description file (see Chapter 12. Adding a new board). If you're usually targeting a particular hardware platform, setting the default here allows users to avoid specifying it manually on every build. If you're always targeting a particular hardware platform then you can avoid this entirely.

Next, you need to add any compartments and libraries that are specific to this firmware image. In most cases, you can do this in just two lines, the first providing the name of the compartment and the second providing the list of files, as shown in Listing 3. For this example, we'll have two compartments. One is our entry point, the other is a function that we'll use as a simple example of a cross-compartment call.

    -- An example compartment that we can call
    compartment("example_compartment")
        add_files("compartment.cc")

    -- Our entry-point compartment
    compartment("hello")
        add_files("hello.cc")

Listing 3. Build system code for building compartments (examples/hello_world/xmake.lua)

The name of the compartment in the xmake.lua must match the name used for the exported function as described in Section 4.1. Exposing compartment entry points. If they do not match, the compiler will raise an error that a function is defined in the wrong compartment.

This example is going to make a cross-compartment call from the "hello" compartment to the "example_compartment" compartment and then print the result using printf, which is provided by the stdio library from the RTOS. The cross-compartment call is exposed from compartment.hh as shown in Listing 4. The only difference between this and a normal C/C++ function prototype is the __cheriot_compartment macro. This is explained in detail in Section 4.1. Exposing compartment entry points.

    /**
     * Example of a function in a compartment.
     */
    __cheriot_compartment("example_compartment") int exported_function(void);

Listing 4. Exporting a function for use by other compartments (examples/hello_world/compartment.hh)

The implementation of this function is trivial (see Listing 5): it just returns 42. Note that, aside from the annotation on the function prototype, we don't need any changes to expose this for use from other compartments. The same is true on the caller's side, as shown in Listing 6. Functions exported from a compartment are called just like any other C function.

    #include "compartment.hh"

    int exported_function()
    {
        return 42;
    }

Listing 5. A trivial implementation of an exported function (examples/hello_world/compartment.cc)

    /// Thread entry point.
    void __cheriot_compartment("hello") entry()
    {
        printf("compartment returned %d\n", exported_function());
    }

Listing 6. A simple compartment entry point that does a cross-compartment call (examples/hello_world/hello.cc)

The entry function is also annotated as a function exported from a compartment. This is because it's a thread entry point, a function that is called at the start of a thread. In CHERIoT RTOS, threads are statically defined. This is described in more detail in Chapter 6. Communicating between threads.

Returning to the build system, Listing 7 shows how the firmware block defines everything that's combined together to create a firmware image. First, the add_deps lines are defining the compartments and libraries that are linked. The first add_deps adds two libraries provided by the RTOS, implementing the core functions for a freestanding C environment and a minimal subset of stdio.h functions, respectively. The next add_deps adds the two compartments that we defined earlier.

Not all of the metadata that we set can be defined in the declarative syntax of xmake, and so we have to implement a function using the on_load hook to set the remaining properties. The "board" property is set from the option that we declared. This is where, if you don't need to support multiple targets, you could directly specify the board that you wish to target.

    -- Firmware image for the example.
    firmware("hello_world")
        -- RTOS-provided libraries
        add_deps("freestanding", "stdio")
        -- Our compartments
        add_deps("hello", "example_compartment")
        on_load(function(target)
            -- The board to target
            target:values_set("board", "$(board)")
            -- Threads to select
            target:values_set("threads", {
                {
                    compartment = "hello",
                    priority = 1,
                    entry_point = "entry",
                    stack_size = 0x400,
                    trusted_stack_frames = 2
                }
            }, {expand = false})
        end)

Listing 7. Build system code for linking the final firmware image (examples/hello_world/xmake.lua)

The "threads" property is set to an array (as a Lua array literal) of thread descriptions. Each thread must set five properties:

- compartment: The compartment in which this thread starts.

- priority: The priority of this thread. Higher numbers indicate higher priorities.

- entry_point: The name of the function for this thread's entry point. This must be a function that takes and returns void, exported from the compartment specified by the compartment key.

- stack_size: The number of bytes of stack space that this thread has allocated.

- trusted_stack_frames: The number of trusted stack frames. Each cross-compartment call pushes a new frame onto this stack and so this defines the maximum cross-compartment call depth (including the entry point) for this thread.

3.6. Running firmware images

Many of the board targets provide a run command. This is simple for simulators: it runs the simulator.

If you have built the example from the last section then you can run it simply with xmake run, like this:

    $ xmake run
    Running file hello_world.
    ELF Entry @ 0x80000000
    tohost located at 0x800061e0
    compartment returned 42
    SUCCESS

The current version of xmake does not automatically build the target so it's good to get into the habit of using xmake && xmake run, which will build (if necessary) before running. This is expected to be changed in a future version of xmake.

In some cases, these commands may depend on external configuration. For example, Sonata has a nice mBed-inspired loader that runs on a Raspberry Pi RP2040, which configures the FPGA and loads firmware images. This exposes the flash filesystem so that you can just copy a firmware file into the SONATA device and the RP2040 will reboot the FPGA and load the firmware. The run script provided for Sonata looks for the SONATA device in some common mount locations and, if that fails, simply prints the location of the file and tells you to copy it yourself.
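The general shape of such a script can be sketched as follows. This is not the script shipped with the RTOS: the function names are hypothetical and the candidate mount points are assumptions, chosen from typical macOS, Linux and container locations.

```shell
# Print the first candidate directory that exists, or fail if none does.
first_existing_dir() {
    for candidate in "$@"; do
        if [ -d "$candidate" ]; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done
    return 1
}

# Copy a firmware file to the SONATA drive if it can be found; otherwise
# report where the file is so that it can be copied by hand.
# The candidate mount points are assumptions, not the run script's list.
copy_to_sonata() {
    if drive=$(first_existing_dir /Volumes/SONATA "/run/media/$USER/SONATA" /mnt/SONATA); then
        cp "$1" "$drive/"
    else
        echo "SONATA drive not found; please copy $1 manually" >&2
        return 1
    fi
}
```

Keeping the search separate from the copy makes it easy to extend the list of locations for an unusual setup.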

If you are working in the dev container, the host filesystems are not automatically available and must be explicitly added. You can add extra mount locations to the .devcontainer/devcontainer.json file. If you're on macOS, the SONATA filesystem will be mounted in /Volumes, so you can add the following snippet (in the top-level object in the JSON file) to expose it to the container:

"mounts": [

"source=/Volumes/SONATA,target=/mnt/SONATA,type=bind"

]

On other operating systems, modify the source part to the correct location. This should prompt for the dev container to be restarted, which is required for new mount points to take effect.

If you are running the dev container directly, you will need to add this instruction directly to the invocation of docker or podman. For example, from the cheriot-rtos directory:

    $ docker run -it --rm \
        --mount source=$(pwd),target=/cheriot-rtos,type=bind \
        --mount source=/Volumes/SONATA/,target=/mnt/SONATA,type=bind \
        ghcr.io/cheriot-platform/devcontainer:latest /bin/bash

Either of these approaches will mount the SONATA filesystem as /mnt/SONATA, where the run script for Sonata can find it.

On Windows, Docker containers run in WSL2, which is a specialised Hyper-V virtual machine. Host folders are exposed via 9p over VirtFS. It appears that this is either too slow, or lacks the correct sync commands, for writes to the Sonata flash storage to be reliable from Docker on Windows. Docker and Podman both work reliably for Sonata on Linux and macOS.

The run command typically provides a convenient default. Some simulators provide various options if you invoke them directly. For example, both the Sail and SAFE simulators provide instruction-level tracing.

The Sail simulator is installed in the dev container as /cheriot-tools/bin/cheriot_sim. This will directly run an ELF binary, so you can recreate the behaviour of the xmake run command like this:

    $ /cheriot-tools/bin/cheriot_sim build/cheriot/cheriot/release/hello_world
    Running file hello_world.
    ELF Entry @ 0x80000000
    tohost located at 0x800061e0
    compartment returned 42
    SUCCESS

If you add the --trace flag, you will get a lot more output. This enables all possible tracing. Every memory access, every register update, and every executed instruction will be traced. You can select a subset of this by providing an argument to --trace=. For example, passing --trace=instr will trace only instructions. The most useful option here is --trace=exception. This will provide a line of output for exceptions, which includes the address of the faulting instruction. This is very useful for finding out where CHERI exceptions have happened.

If you use xmake run to run a simulator then it will run only the simulator that the firmware image was built for. If you invoke a simulator directly, you will not get this check. Most targets have sufficiently different memory layouts that you cannot use the same firmware image between them.

The SAFE simulator is built with Verilator, which requires tracing to be enabled or disabled as a compile-time option. The dev container therefore installs two versions: cheriot_ibex_safe_sim and cheriot_ibex_safe_sim_trace. Unlike the Sail simulator, this cannot simply run an ELF file. It needs a VHX file for each memory, containing a hex dump of the initial contents of that memory. The run script for SAFE first creates this and then invokes the simulator. The scripts/ibex-build-firmware.sh script takes the ELF file as an argument and then creates the firmware directory containing the two required VHX files. The simulator expects a firmware directory to exist in the current directory and does not take any arguments.

For both simulators, tracing provides a lot of output and redirecting this to a file may be useful.
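For the Sail simulator, a minimal sketch of capturing a trace in a file might look like the following. The function name and log file name are hypothetical. The --trace=exception flag is the one described above.

```shell
# Run a simulator with exception tracing and capture everything it
# prints (stdout and stderr) in a log file for later inspection.
# Arguments: simulator binary, firmware image, log file.
run_with_exception_trace() {
    "$1" --trace=exception "$2" >"$3" 2>&1
}

# Usage in the dev container, with the image path from the earlier example:
#   run_with_exception_trace /cheriot-tools/bin/cheriot_sim \
#       build/cheriot/cheriot/release/hello_world trace.log
```

Because the program's own output goes to the same file, the log keeps the trace lines in order relative to anything the firmware printed.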

The MPACT simulator also provides an interactive mode, enabled with -i. This provides a debugging environment. You can use help inside the interactive mode to see the commands, which include breakpoints, watchpoints, and so on.